“It is more rational to sacrifice one life than six.”

– Spock

Consider the following two situations:

Situation #1: You are among a group of 25 people hiding during World War II in the attic of an old country house. A group of enemy soldiers, who have been searching for your group and will kill everyone if they discover you, are on the floor below. The slightest sound from anyone in your group will give you all away. Your baby makes a face as if to cry, and you quickly cup a hand over the baby’s nose and mouth to keep it quiet. You realize that if you take your hand away, the baby will cry, and your group (including you and the baby) will be discovered and killed. If you keep your hand in place, the baby will suffocate and die, but everyone else will survive. What should you do?

Situation #2: You are a participant in the following experiment. There is a revolving $10 pot, and another person will repeatedly offer you an amount from $1 to $9 from that pot, keeping the rest for himself. If you refuse any given offer, you both get nothing. The size or number of offers you refuse will have no effect on subsequent offers. You will never encounter this person again. Here’s what the experiment looks like:

What is the lowest offer you should accept?

Both of the above dilemmas suggest difficult but fairly obvious utilitarian choices (utilitarian = providing the maximal benefit for all). If you’re thinking rationally (like Spock), you should kill the baby to save the group of 25. And in Situation #2, you should accept any and all offers, even an unfair offer of $1 (while the giver keeps $9), because something is better than nothing. However, perhaps not surprisingly, humans often don’t make the rational decision in situations like these; instead, they rely on emotional responses that lead them toward rationally incorrect choices. I will discuss the two psychological mechanisms by which decisions such as these are made, offer some compelling examples of how neuroscience is shedding new light on moral decision making, and discuss the real-life implications of this research.
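The “something is better than nothing” argument for Situation #2 is plain arithmetic. Below is a minimal Python sketch, assuming a made-up sequence of offers and a responder who accepts any offer at or above a fixed threshold; the function name `total_payoff` and the offer values are hypothetical illustrations, not data from any experiment. Because a refusal pays $0 and does not change later offers, raising the threshold can only remove money from the responder’s total.

```python
# Minimal sketch of the payoff arithmetic in Situation #2.
# Assumption: the offers below are hypothetical; the article gives no data.


def total_payoff(offers, threshold):
    """Total earned by a responder who accepts every offer >= threshold.

    A refused offer pays the responder nothing, and (per the setup)
    refusals have no effect on later offers, so each offer can be
    judged on its own.
    """
    return sum(offer for offer in offers if offer >= threshold)


# Hypothetical offers drawn from the $1-$9 range described in the setup.
hypothetical_offers = [1, 3, 5, 2, 9, 1, 4, 7, 2, 6]

# Compare every possible acceptance threshold from $1 to $9.
payoffs = {t: total_payoff(hypothetical_offers, t) for t in range(1, 10)}

for threshold, payoff in payoffs.items():
    print(f"accept offers >= ${threshold}: responder earns ${payoff}")

# Every offer is positive, so raising the threshold can only drop offers;
# the lowest threshold ($1, i.e. accept everything) is never beaten.
best_threshold = max(payoffs, key=payoffs.get)
print("best threshold:", best_threshold)
```

A fixed threshold is used only because it is the simplest way to frame “the lowest offer you should accept.” The sketch deliberately ignores fairness, which is exactly the emotional factor that leads many people to reject low offers.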

The Role of Emotion and Reason in Human Decision Making

Philosophy has long been concerned with understanding the basis for human morality. Rationalist philosophers, such as Plato and Kant, characterized moral judgment as a rational exercise, based upon deductive reasoning and cost-benefit analysis. In contrast, philosophers such as David Hume and Adam Smith held that automatic emotional responses played a primary role in moral judgments. Although many contemporary psychologists and philosophers continue to favor one position over the other, researchers are increasingly integrating the two views, suggesting that moral decisions result from the confluence of fast, automatic emotional responses and controlled, deliberative reasoning. In recent years, psychologists have been using the tools of neuroscience – namely, functional magnetic resonance imaging (fMRI) – to better understand the mental processes underlying such “dual process” models.

In order to examine how these two systems interact in decision making, we’ll look at two experiments.

The first experiment utilizes fMRI to shed light on the conditions under which we might engage emotion vs. reason when making a moral judgment. The second experiment involves patients who have suffered brain damage in a region of the brain known to play a role in social emotional responding, and we’ll look at how this type of localized damage can actually lead to better decisions (in a utilitarian sense) in some moral decision tasks.

Moral Decisions and the Trolley Dilemma

The first experiment we’ll discuss builds on a problem originally posed by philosophers Philippa Foot and Judith Jarvis Thomson, known as the “trolley problem,” which presents the following two dilemmas:

(1) A trolley is speeding down some tracks. You glance ahead and notice five people working on the track who don’t see the trolley coming and will be killed. However, there is a switch within your reach that, if you pull it, will divert the trolley onto another set of tracks, saving those five people but killing one person working on the other track. Is it ok to pull the switch?

(2) Again, the trolley is headed for five people. You are standing next to a man on a footbridge overlooking the tracks. If you push him off the bridge and in front of the trolley, his body will stop it, saving the five. Is it ok to push the man off the bridge?

Most people say yes to #1 (it’s ok to pull the switch) but no to #2 (it’s not ok to push the man), even though the two situations are identical in a utilitarian sense: one life is sacrificed to save five. This presents a puzzle: Why do most people make these choices? And what kind of mental calculus are people doing to arrive at them so consistently?

According to Harvard psychologist Josh Greene, the difference lies in the emotional responses we experience in each case. In the switch case, our role in the man’s death is somewhat passive. He happens to be on the other track, and we happen to pull the switch that directs the trolley onto it, but we’re not directly involved in his death. It doesn’t feel wrong – or at least not wrong enough to overwhelm our rational analysis of the situation (which is that five lives are worth more than one). Greene refers to this scenario as the “impersonal case.”

The footbridge situation – “the personal case” – is quite different. We’re playing an active role in the man’s death; the idea of pushing him certainly “feels” more wrong than the idea of pulling the switch. I would venture to say that for many people it feels a little like murder and evokes an extremely negative emotional response. This emotional response seems likely to drive the decision to override the rational calculus of the situation and leave the man alone. This explanation, if correct, makes specific predictions about which neural networks should be driving these decisions. Greene had people make these judgments while lying in an fMRI scanner and found the following:

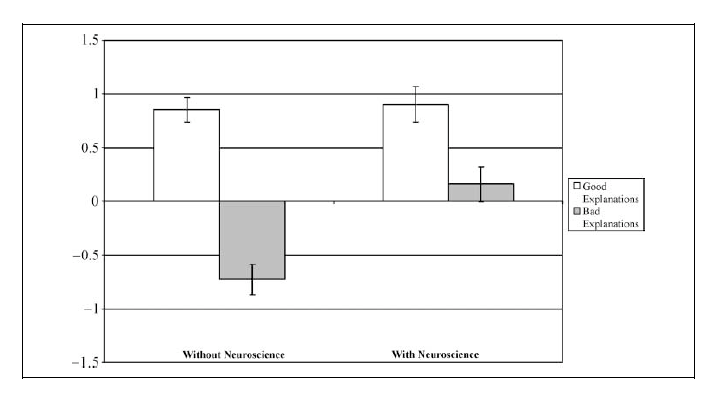

– In cases where people said it was ok to pull the switch, the active brain regions were those involved in deliberate reasoning (the dorsolateral prefrontal cortex, or DLPFC, and the inferior parietal lobule). In other words, to make the decision to pull the switch, people seemed to engage deliberate, rational thought processes.

– In cases where people said it wasn’t ok to push the man, the active regions were those involved in processing social emotions (the medial prefrontal cortex, or MPFC, and the posterior cingulate). The thought of pushing someone in front of a trolley seemed to evoke a strongly negative emotional response that drove moral disapproval of the act.

But perhaps the most interesting finding came from the brain data of subjects who decided it was ok to push the man off the bridge. Greene et al. (2004) found that choosing the more utilitarian option revealed a conflict between the “rational” and “emotional” systems. (I put “rational” and “emotional” in quotation marks because these are not entirely distinct systems in functional terms but are highly interconnected.) They also found activation in the anterior cingulate cortex, a region whose activity is thought to reflect conflict between competing brain processes. In other words, the thought of pushing someone in front of a trolley evokes a strongly negative emotional response, which must then be overridden in order to make the more utilitarian, or rational, response.

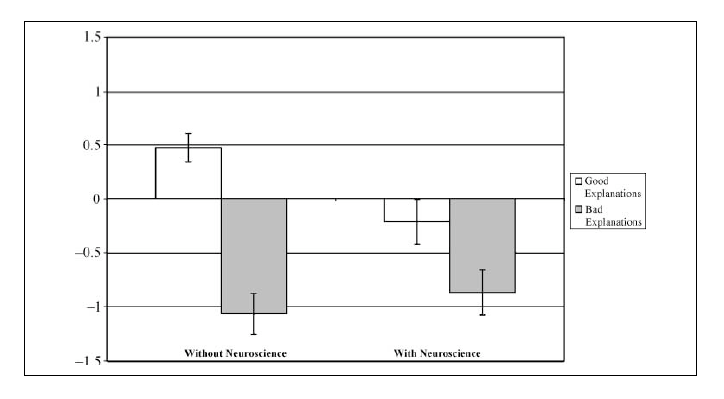

Again, most people chose not to push the man off the footbridge. Greene’s experiment strongly suggests that activity in brain regions associated with emotional responding is responsible. But an even better test of whether a particular brain region is responsible for a given behavior is to look at people who have damage to that area of the brain. That is, would people with damage to the “social emotional” centers of the brain be more likely to push the man off the bridge? A group of Italian researchers (Moretto et al. 2010) recently tested Greene’s claim by recruiting people with damage to a region of the brain active during social emotional responding, the ventromedial prefrontal cortex (vmPFC).

Above: brains of patients with vmPFC damage (orange highlights show the focal point of the damage).

These patients participated in the same task as in the first experiment (the trolley task). As predicted, the brain-damaged patients more often chose to push the man off the bridge to save the five people on the tracks. This is presumably because they didn’t experience an emotional signal (which would normally be reflected in MPFC activity) that would prevent them from endorsing such an action, thereby allowing deliberative mental processes to reign supreme. Simply put, these patients’ lack of an emotional response led them to the more rational choice. Do these data mean that people with vmPFC damage are generally better equipped to make rational moral decisions? Although this kind of brain damage sometimes results in the limited benefits described here, it also leads to a much larger set of deficits, including a tendency toward exaggerated anger, irritability, emotional outbursts and tantrums, particularly in social situations involving frustration or provocation. People with this kind of brain damage also often have difficulty making sound economic decisions (Damasio 1994).

Real Life Implications

One of the biggest challenges inherent to experimental psychology lies in the push and pull between naturalism and control: the more control we impose on the conditions of an experiment in order to isolate the variable of interest, the less “ecologically valid” the results are likely to be. The moral choices people make from the comfort of a psychology lab may or may not replicate in the real world. But naturalism is not the most important aspect of the trolley problem. What matters are the mental processes underlying two conditions that differ not in their rational calculus but only in the personal role we play in the outcome. Consider the case of Flight 93, the ill-fated plane that crashed into a Pennsylvania field on 9/11. The White House was notified about the possible hijacking about 50 minutes before the plane came down, and in that window a difficult decision was made. In an interview with Tim Russert shortly after 9/11, Vice President Dick Cheney talked about the decision:

“Yes. The president made the decision … that if the plane would not divert … as a last resort, our pilots were authorized to take them out. Now, people say, you know, that’s a horrendous decision to make. Well, it is. You’ve got an airplane full of American citizens, civilians, captured by … terrorists, headed and are you going to, in fact, shoot it down, obviously, and kill all those Americans on board?”

Although this decision is hard to argue against, it was certainly a difficult one to make. As in the trolley problem, it requires that rational, deliberative thinking take precedence over the negative and aversive emotions, the revulsion and guilt, that most people would feel if forced to play an active role in the death of one (or several) innocent people. And although the president and his cronies had to make the decision “on paper,” the crash itself obviated the need to actually issue the order to shoot the plane down. In this sense, the incident resembles a lab experiment in which decisions aren’t actually enacted but remain theoretical. The tougher decision would have come later, with the order to fire. Furthermore, imagine the intense internal conflict of the pilot who would have been ordered to actually pull the trigger. It’s a reminder of why military organizations train their soldiers to follow orders without question. Ideally, from the military’s point of view, a soldier neither rationally analyzes the moral parameters of a situation nor reacts emotionally to it, but simply acts on his orders. If a military commander could peer into the brain of a soldier faced with such a dilemma, he would ideally want to see none of the brain activity found in Greene’s conflicted rationalizers.

So what does this all mean for you and me? For one, it should serve as a reminder that there are times when we’ll arrive at the optimal solution to a moral quandary not by “trusting our gut” but by deliberately and rationally working through the problem. We need to develop a healthy distrust of our common-sense instincts, as they can often mislead us. But that’s not to say that rational deliberation will always lead us to respond better to moral challenges. On the contrary, sometimes the emotional system is exactly the tool for the job. After all, the Enterprise couldn’t have survived without both Spock and Kirk.

Greene, J., Nystrom, L., Engell, A., Darley, J., & Cohen, J. (2004). The neural bases of cognitive conflict and control in moral judgment. Neuron, 44(2), 389–400. DOI: 10.1016/j.neuron.2004.09.027

Moretto, G., Làdavas, E., Mattioli, F., & di Pellegrino, G. (2010). A psychophysiological investigation of moral judgment after ventromedial prefrontal damage. Journal of Cognitive Neuroscience, 22(8), 1888–1899. DOI: 10.1162/jocn.2009.21367

Damasio, A. R. (1994). Descartes’ error: Emotion, reason, and the human brain. New York: G. P. Putnam’s Sons.